We post news and comment on federal criminal justice issues, focused primarily on trial and post-conviction matters, legislative initiatives, and sentencing issues.

A CAUTIONARY TALE

You’ve probably heard of artificial intelligence programs – such as ChatGPT – doing all sorts of great things. While inmates can’t get it on their Bureau of Prisons-sold tablets, they might decide to have friends on the street use it for some high-powered legal research.

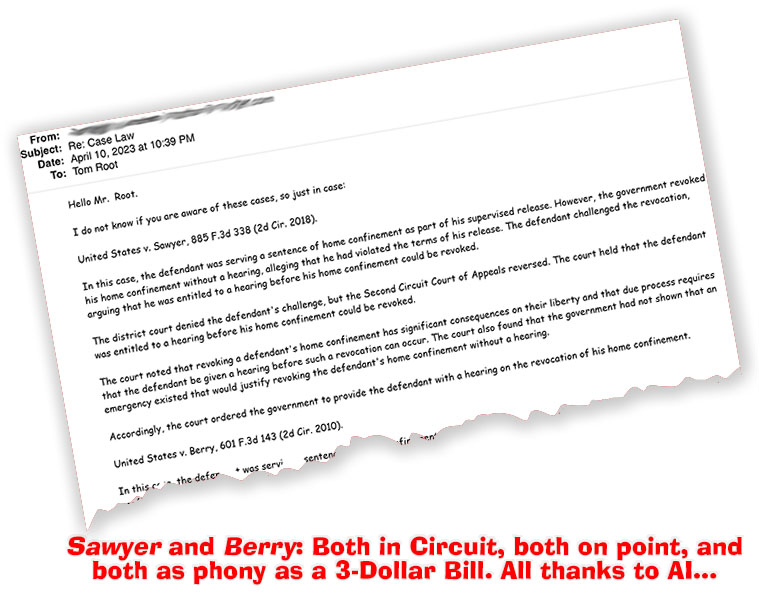

Last week, I was wrestling with a tough habeas corpus issue. Even with a LEXIS subscription, I wasn’t finding much on the topic. A friend interested in the issue sent me an email with two Federal Reporter 3d case citations that were exactly on point.

I was excited and at the same time embarrassed I had not found those cases in my research. I looked up both cases to read the whole opinions, but the citations led nowhere. So I searched the respective circuits by case name but could find nothing.

I contacted my friend for help. He checked the citations himself and then sheepishly reported that the cases did not exist. He had used ChatGPT to research the issue but had not independently verified the results.

Computer scientists call it ‘hallucinating’. Apparently, when an AI program cannot find the answer someone is seeking, it can make things up. That’s what happened here.

So, a caution: if you use AI for legal research, you may find some really good information. But check every case citation to be sure the case exists and says what the AI tells you it says.

– Thomas L. Root